Tasks on hold

What are tasks on hold?

Our labellers can skip a task when they cannot label it confidently using the instructions provided. Tasks are typically skipped when:

- The labels in the project setup do not match the labels listed in the instructions guide.

- The instructions do not cover certain edge cases or scenarios in your dataset.

- There is an error with the image, so the labeller cannot identify what needs to be annotated.

A task is put on hold only when two labellers in a row skip it. Each labeller can skip only a limited number of tasks per day.

Access tasks on hold from the View Tasks page

Steps to address tasks on hold

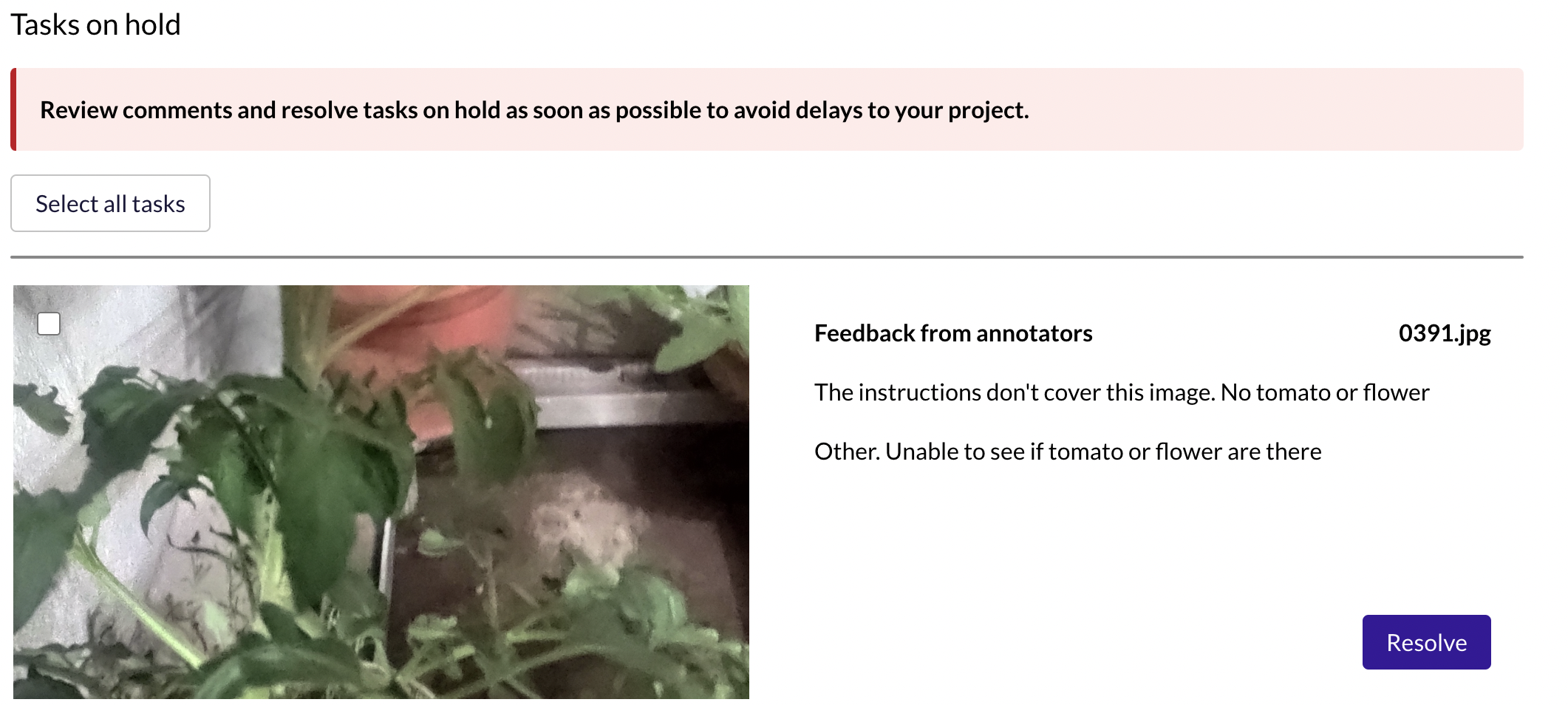

- Review the feedback on each task on hold.

- If the project is ongoing, you can Pause the project temporarily while you update your instructions.

- Update your project setup or instructions to address the concerns raised.

- Save your updated instructions.

- Select the tasks on hold and Return them to the task queue so they are labelled using the updated instructions.

You can return tasks individually, or select all of them and return them together for our labellers to annotate.

What if I don’t return tasks on hold to the task queue?

- These tasks will not be labelled by our labellers.

- Unreturned tasks are still included in any exported output data, but they will contain no annotations.

- You will not be charged for these tasks.

Implementing labeller feedback

Here are some ways to update your instructions based on the feedback you receive:

| Labeller feedback | Ways to update your instructions |
|---|---|
| The instructions don’t cover this image. “Couldn’t find ‘Rotten tomato’ label even though it’s in the instructions” “No Rotten tomato label” | Either update the project setup to add the missing label, or, if you don’t need that label in this project, state in your instructions that this item does not need to be labelled. |
| The instructions don’t cover this image. “Looks like a cap but there’s no bill or brim. Unsure if it should be ‘cap’ or ‘cowboy hat’ or ‘other’?” “Not sure - looks like fez cap but there’s no label for fez?” | Use this image as an example in your instructions and clarify whether it should be annotated. If labellers are confusing it with a different label (e.g. caps), you could also add a note to that label’s description stating that fez hats like this one (insert image) should not be labelled as “caps”. |
| There is an error with the image. “The image is not loading, I am not quite sure if this is an error or there's really no image.” “No image?” | If the image displays properly when you review the skipped task, you should be able to return the task to the queue without issues. If the image doesn’t display for you either, check whether the image file has been corrupted, or try re-uploading it. |

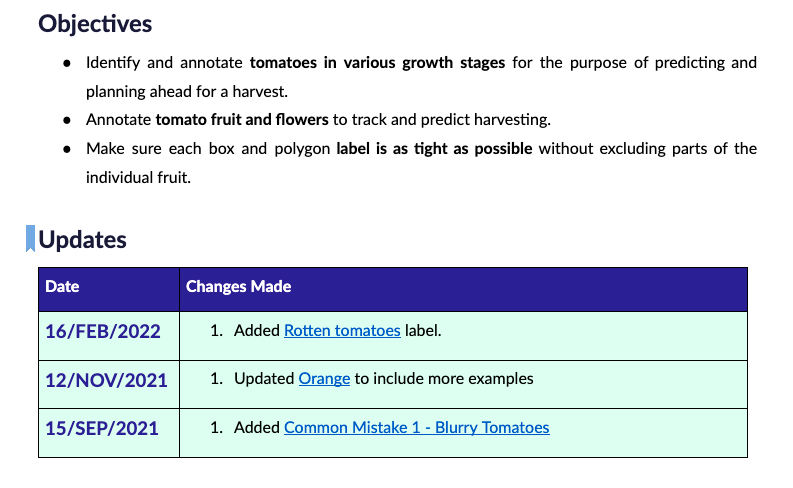

After you’ve updated your instructions, you can add a link to each updated part in the Updates section of your instructions document so that labellers can see new updates at a glance.

You can also include a brief description of the latest updates. See How to set up links and bookmarks in Google Docs.

For more help on writing labelling instructions, read here.
